Islam Mohamed

Mixed Precision Training

A High-Level Overview

Deep Neural Networks (DNNs) have achieved breakthroughs in several areas, including Computer Vision, Natural Language Understanding, Speech Recognition tasks, and many others.

Although increasing network size typically improves accuracy, it also increases the computational resources required for training (GPU compute and memory).

New techniques have therefore been developed to train models faster (without losing accuracy or modifying the network hyper-parameters) by lowering the required memory, which lets us train larger models or train with larger mini-batches.

By default, all computations in neural networks are done in single precision floating point.

Single precision floating point arithmetic works with 32-bit floating point numbers, which means that all the floats in the arrays that represent inputs, activations, weights, etc. are 32-bit floats (FP32).

One idea for reducing memory usage is to work with 16-bit floats, known as the half precision floating point format (FP16). However, half precision has a much smaller range and lower precision than single or double precision floats. For this reason, training in half precision alone sometimes cannot achieve the same accuracy.

Mixed precision training, which uses both single and half precision representations, speeds up training while achieving the same accuracy.

Problems In Half Precision

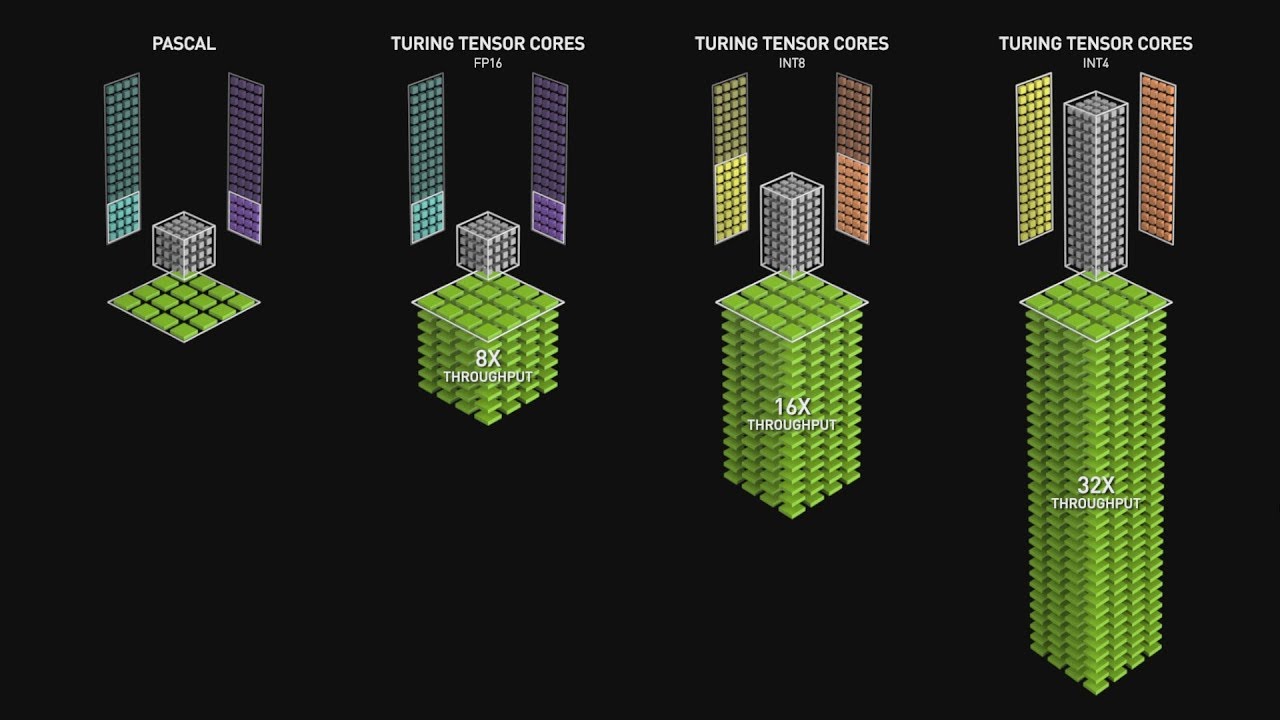

To understand the problems in half precision, let’s look at what an FP16 number looks like:

Fig. 1 : half precision floating point format.

The 16 bits are divided into three segments:

- Bit 15 is the sign bit.

- Bits 10 to 14 are the exponent.

- Bits 0 to 9 (the final 10 bits) are the fraction.

The value of this representation is calculated as shown below.

Fig. 2 : half precision floating point format with the value of each bit.

- If the exponent bits are all ones (11111), the value is infinity when the fraction bits are all zero, and NaN (“Not a number”) otherwise.

- If the exponent bits are all zeros (00000), the value is a subnormal number, calculated by:

$$(-1)^{\text{signbit}} \times 2^{-14} \times 0.\text{significantbits}_2$$

- Otherwise, the value is a normalized number, calculated by:

$$(-1)^{\text{signbit}} \times 2^{\text{exponent} - 15} \times 1.\text{significantbits}_2$$
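The decoding rules above can be checked with a short sketch. The function name `decode_fp16` and the example bit patterns are illustrative choices, not part of the original article. Python's standard `struct` module (format code `e`) is used only as an independent reference.

```python
import struct


def decode_fp16(bits: int) -> float:
    """Decode a 16-bit pattern by hand, following the three cases above."""
    sign = (bits >> 15) & 0x1        # bit 15
    exponent = (bits >> 10) & 0x1F   # bits 10 to 14
    fraction = bits & 0x3FF          # bits 0 to 9
    if exponent == 0x1F:
        # All-ones exponent: infinity if the fraction is zero, NaN otherwise.
        if fraction == 0:
            return float("-inf") if sign else float("inf")
        return float("nan")
    if exponent == 0:
        # Subnormal: 2^-14 * 0.fraction
        magnitude = 2.0 ** -14 * (fraction / 1024)
    else:
        # Normalized: 2^(exponent - 15) * 1.fraction
        magnitude = 2.0 ** (exponent - 15) * (1 + fraction / 1024)
    return -magnitude if sign else magnitude


def decode_with_struct(bits: int) -> float:
    """Reference decoding using the standard library's half precision support."""
    return struct.unpack("<e", struct.pack("<H", bits))[0]


# Illustrative patterns: 1.0, -2.0, smallest subnormal, largest finite value.
for bits in (0x3C00, 0xC000, 0x0001, 0x7BFF):
    print(f"0x{bits:04X} -> {decode_fp16(bits)} (struct: {decode_with_struct(bits)})")
```

The hand-written decoder and `struct` should agree on every finite pattern, which is a quick way to convince yourself that the formulas are read correctly.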

With the half precision format, if we try to add 1 + 0.0001 the result is 1. The cause is limited precision: the gap between 1 and the next representable FP16 value is 2 ^ -10 (about 0.00098), so 0.0001 is lost when it is aligned with 1, as shown in this answer.

This causes a number of problems while training DNNs. To experiment with conversion or addition in the binary16 floating point format, check this site.
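As a rough check of the 1 + 0.0001 example, the sketch below emulates FP16 rounding with Python's `struct` module. The helper `to_fp16` is a hypothetical name, and computing the sum in double precision before rounding to FP16 is an emulation of FP16 addition, not real half precision hardware arithmetic.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (returned as a float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# The bit pattern right after 1.0 (0x3C00) is 0x3C01.
next_after_one = struct.unpack("<e", struct.pack("<H", 0x3C01))[0]
spacing = next_after_one - 1.0

sum_small = to_fp16(1.0 + 0.0001)  # increment smaller than half the spacing
sum_large = to_fp16(1.0 + 0.001)   # increment close to one full spacing

print("spacing near 1.0:", spacing)
print("1 + 0.0001 in FP16:", sum_small)
print("1 + 0.001  in FP16:", sum_large)
```

An increment survives only if it is at least about half the spacing between neighbouring FP16 values at that magnitude.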

The main issues while training with FP16

- Values are imprecise.

- Underflow Risk.

- Overflow Risk.

Values are imprecise

When training purely in FP16, all weights, activations, and gradients are stored as FP16.

As we know, the weights are updated based on this equation:

New_weight = Weight - Learning_Rate * Weight.Gradient

Weight.Gradient and Learning_Rate usually have small values. As shown before, in half precision, if the weight is 1 and Learning_Rate * Weight.Gradient is 0.0001 or lower, the update is rounded away and the weight value freezes.
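The frozen weight can be reproduced with a small emulation. This is a minimal sketch: `to_fp16` is a hypothetical helper built on `struct`, and the gradient value of 1.0 is made up purely for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (returned as a float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


weight = to_fp16(1.0)
learning_rate = to_fp16(0.0001)
gradient = to_fp16(1.0)  # made-up constant gradient

for _ in range(1000):
    update = to_fp16(learning_rate * gradient)
    # Every intermediate result is rounded back to FP16.
    weight = to_fp16(weight - update)

print("FP16 weight after 1000 updates:", weight)
print("Exact arithmetic would give about:", 1.0 - 1000 * 0.0001)
```

Each individual update is smaller than half the gap between 1.0 and its FP16 neighbour, so the subtraction rounds back to 1.0 on every step.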

Underflow Risk

In FP16, gradients can be converted to zero because they are often very small.

In FP16 arithmetic, 2 ^ -24 ≈ 0.000000059605 is the smallest positive subnormal number, so magnitudes below half of it (2 ^ -25) are rounded to zero; for more details, investigate here.

With underflow, the affected weights receive zero updates, so the network stops learning.
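A short sketch of the underflow threshold, again emulating FP16 with the standard `struct` module. The helper `to_fp16` and the sample gradient `1e-8` are illustrative assumptions.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (returned as a float)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


smallest_subnormal = 2.0 ** -24

kept = to_fp16(smallest_subnormal)  # exactly representable
flushed = to_fp16(2.0 ** -26)       # too small, rounds to zero
tiny_gradient = to_fp16(1e-8)       # a plausible small gradient, also lost

print("2^-24 ->", kept)
print("2^-26 ->", flushed)
print("1e-8  ->", tiny_gradient)
```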

Overflow Risk

In FP16, activations and network parameters can grow until they exceed the largest representable value (65,504), at which point they become Inf and then produce NaNs.

With overflow or exploding gradients, the network learns garbage.
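The path from a large value to Inf and then NaN can be sketched as follows. Python's `struct` raises `OverflowError` for values beyond the FP16 range, so the hypothetical helper `to_fp16` maps that case to infinity, which is how IEEE 754 round-to-nearest hardware behaves. The activation value 300.0 is made up for illustration.

```python
import math
import struct

FP16_MAX = 65504.0


def to_fp16(x: float) -> float:
    """Round to FP16; out-of-range values become +/-inf (emulated)."""
    if math.isnan(x) or math.isinf(x):
        return x
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


activation = to_fp16(300.0)
squared = to_fp16(activation * activation)  # 90000 exceeds FP16_MAX
difference = to_fp16(squared - squared)     # inf - inf

print("largest finite FP16 value:", to_fp16(FP16_MAX))
print("300 * 300 in FP16:", squared)
print("inf - inf:", difference)
```

Once a single Inf appears, ordinary operations such as subtraction or normalization can turn it into NaN, which then spreads through every value that depends on it.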

The Proposed Techniques for Training with Mixed Precision

Mainly there are three techniques for preventing the loss of critical information.

- Single precision FP32 Master copy of weights and updates.

- Loss (Gradient) Scaling.

- Accumulating half precision products into single precision.

Single precision FP32 Master copy of weights and updates

To overcome the first problem, we keep an FP32 master copy of all weights. In each iteration, we make an FP16 copy of the master weights, run forward and backward propagation in FP16, and then apply the update to the weights stored in the FP32 master copy, as shown below.

Fig. 3 : Mixed precision training iteration for a layer.

Although storing an additional copy of the weights increases the memory required for weights, overall memory consumption is still approximately half of that needed for FP32 training, because activations, which dominate memory usage, are stored in FP16.
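The effect of the master copy can be sketched with an emulation. The helpers `to_fp16` and `to_fp32` are hypothetical names built on `struct`, and the constant gradient of 1.0 stands in for a real backward pass.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


learning_rate = 0.0001
master_weight = to_fp32(1.0)  # FP32 master copy

for _ in range(1000):
    fp16_weight = to_fp16(master_weight)  # FP16 copy for forward/backward
    fp16_gradient = to_fp16(1.0)          # made-up gradient from the backward pass
    # The update is applied to the FP32 master copy, not to the FP16 copy.
    master_weight = to_fp32(master_weight - learning_rate * fp16_gradient)

print("FP32 master weight:", master_weight)
print("FP16 copy for the next iteration:", to_fp16(master_weight))
```

Small updates accumulate in the FP32 copy until they are large enough to change the FP16 copy as well, so the weight no longer stays stuck at 1.0.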

Loss (Gradient) Scaling

- In the example network studied in the Mixed Precision Training paper, gradient values with magnitudes below 2 ^ -27 were not relevant to training, whereas it was important to preserve values in the [2 ^ -27, 2 ^ -24] range.

- Most of the half precision range is not used by gradients, which tend to be small values with magnitudes below 1. Thus, we can multiply them by a scale factor S to keep relevant gradient values from becoming zeros.

- This constant scaling factor is chosen empirically or, if gradient statistics are available, directly by choosing a factor so that its product with the maximum absolute gradient value is below 65,504 (the maximum value representable in FP16).

- Of course, we don’t want the scaling to affect the weight update, so after converting the scaled gradients into FP32, we divide them by the scale factor (at which point there is no risk of them becoming 0).
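The scaling idea can be sketched on a single gradient value. The helpers, the gradient 2 ^ -26, and the scale factor 1024 are illustrative assumptions. In real training the loss is multiplied by S and backpropagation carries the factor into every gradient, whereas here the gradient is scaled directly.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


true_gradient = 2.0 ** -26  # inside the range that is lost in FP16
scale = 1024.0              # scale factor S, chosen for illustration

unscaled_fp16 = to_fp16(true_gradient)        # underflows to zero
scaled_fp16 = to_fp16(true_gradient * scale)  # shifted into the FP16 range
recovered = to_fp32(scaled_fp16) / scale      # unscale in FP32

print("without scaling:", unscaled_fp16)
print("with scaling, stored in FP16:", scaled_fp16)
print("after unscaling in FP32:", recovered)
```

Using a power of two for S keeps the multiplication and division exact, since they only shift the exponent.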

Accumulating half precision products into single precision

Looking into the last issue, neural network arithmetic operations fall into three groups: vector dot-products, reductions, and point-wise operations.

These categories benefit from different treatment when it comes to reduced precision arithmetic.

- Some networks require that the FP16 vector dot-product accumulates the partial products into an FP32 value, which is then converted to FP16 before storing.

- Large reductions (sums across elements of a vector) should be carried out in FP32. Such reductions mostly come up in batch-normalization layers when accumulating statistics and softmax layers.

- Point-wise operations, such as non-linearities and element-wise matrix products, are memory-bandwidth limited. Since arithmetic precision does not impact the speed of these operations, either FP16 or FP32 math can be used.
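To illustrate why the accumulator precision matters for dot-products and large reductions, here is a minimal emulation. The helpers and the input vectors (4096 products that are each exactly 1.0) are made up for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest FP32 value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


a = [to_fp16(0.5)] * 4096
b = [to_fp16(2.0)] * 4096

fp16_sum = 0.0
fp32_sum = 0.0
for x, y in zip(a, b):
    product = to_fp16(x * y)                 # FP16 inputs, product is 1.0
    fp16_sum = to_fp16(fp16_sum + product)   # FP16 accumulator
    fp32_sum = to_fp32(fp32_sum + product)   # FP32 accumulator

fp32_sum_as_fp16 = to_fp16(fp32_sum)         # convert once, before storing

print("FP16 accumulator:", fp16_sum)
print("FP32 accumulator, stored as FP16:", fp32_sum_as_fp16)
```

The FP16 accumulator stops growing once the gap between neighbouring values exceeds the size of each product, while the FP32 accumulator keeps the full sum and is converted to FP16 only at the end.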

Mixed Precision Training Steps

1. Maintain a master copy of weights in FP32.

2. Initialize scaling factor (S) to a large value.

3. For each iteration:

3.1 Make an FP16 copy of the weights.

3.2 Forward propagation (FP16 weights and activations).

3.3 Multiply the resulting loss with the scaling factor S.

3.4 Backward propagation (FP16 weights, activations, and their gradients).

3.5 If there is an Inf or NaN in weight gradients:

3.5.1 Reduce S.

3.5.2 Skip the weight update and move to the next iteration.

3.6 Multiply the weight gradient with 1/S.

3.7 Complete the weight update (including gradient clipping, etc.).

3.8 If there hasn’t been an Inf or NaN in the last N iterations, increase S.
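Steps 3.3 to 3.8 can be sketched as a small simulation. Everything here is a hypothetical illustration: the class `DynamicLossScaler`, its parameters, the halving and doubling of S, and the constant gradient of 4.0 are assumptions chosen so that overflow actually happens, not the behaviour of any particular library.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round to FP16; out-of-range values become +/-inf (emulated)."""
    if math.isnan(x) or math.isinf(x):
        return x
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


class DynamicLossScaler:
    """Hypothetical helper following steps 3.5 to 3.8 for a list of gradients."""

    def __init__(self, init_scale=2.0 ** 15, growth_interval=3):
        self.scale = init_scale
        self.growth_interval = growth_interval  # the "N" in step 3.8
        self.good_steps = 0

    def unscale_or_skip(self, scaled_grads):
        """Return unscaled gradients, or None when the update must be skipped."""
        if any(math.isinf(g) or math.isnan(g) for g in scaled_grads):
            self.scale /= 2.0   # 3.5.1: reduce S
            self.good_steps = 0
            return None         # 3.5.2: skip the weight update
        unscaled = [g / self.scale for g in scaled_grads]  # 3.6
        self.good_steps += 1
        if self.good_steps >= self.growth_interval:        # 3.8: increase S
            self.scale *= 2.0
            self.good_steps = 0
        return unscaled


master_weight = 1.0  # Python float standing in for the FP32 master copy
learning_rate = 0.1
scaler = DynamicLossScaler()
skipped = 0

for _ in range(6):
    true_gradient = 4.0  # made-up constant gradient
    # 3.3 and 3.4: scaling the loss scales every FP16 gradient by S.
    scaled_grads = [to_fp16(true_gradient * scaler.scale)]
    grads = scaler.unscale_or_skip(scaled_grads)
    if grads is None:
        skipped += 1
        continue
    master_weight -= learning_rate * grads[0]  # 3.7: weight update

print("skipped iterations:", skipped)
print("final scale:", scaler.scale)
print("master weight:", master_weight)
```

In this toy run the initial scale is too large, so the first iterations overflow and are skipped while S is reduced. After a few clean iterations S grows again and triggers another skipped step, which is the expected cost of probing for the largest usable scale.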


Mixed Precision APIs

- NVIDIA developed Apex as a PyTorch extension for easy mixed precision and distributed training, to help researchers train their models more efficiently.

- Native automatic mixed precision is now supported in PyTorch and replaces Apex.

- Apex drawbacks:

  - Requires building extensions

  - Windows is not supported

  - Doesn’t guarantee PyTorch version compatibility

  - Doesn’t support forward/backward compatibility (casting data flow)

  - Doesn’t support DataParallel and intra-process model parallelism

  - Flaky checkpointing

  - Others

Mixed Precision In Frameworks

- torch.cuda.amp fixes all of these issues: the interface is more flexible and intuitive, and the tighter integration with PyTorch brings more future optimizations into scope.

- So there is no longer any need to compile Apex.

- See Automatic Mixed Precision in TensorFlow for Faster AI Training on NVIDIA GPUs.

Automatic Mixed Precision package - PyTorch

- torch.cuda.amp provides convenience methods for running networks with mixed precision, where some operations use the torch.float32 (float) datatype and other operations use torch.float16 (half) as we have shown before.

- At the time of writing, PyTorch is still developing its automatic mixed precision package, but gradient scaling (the GradScaler class) is ready and stable for use.

- This package mainly uses torch.cuda.amp.autocast and torch.cuda.amp.GradScaler together.

- torch.cuda.amp.GradScaler is not a complete implementation of automatic mixed precision, but it is useful when you manually run regions of your model in float16.

- If you aren’t sure how to choose operation precision manually, use torch.cuda.amp.autocast, which serves as a context manager or decorator that allows regions of your script to run in mixed precision (not stable yet at the time of writing).

Note : If you trained your model in FP32 and call model.half() at test time, PyTorch converts all the model weights to half precision and runs inference with them.


References

- Mixed Precision Training.

- Training Mixed Precision User Guide.

- Introduction For Mixed Precision Training By Fastai.

- Apex Pytorch Easy Mixed Precision Training.

- Automatic Mixed Precision in TensorFlow.

- Automatic Mixed Precision Package In Pytorch.